Decoding the Numbers: How Data and Statistical Reasoning Transform Modern Decision-Making

In an era defined by data abundance, the ability to interpret raw statistics and extract meaningful patterns has become a cornerstone of informed action across industries, governance, and daily life. From algorithmic trading to public health policy, statistical reasoning underpins the logic behind pivotal decisions. The power of data lies not merely in its volume, but in the precision of insight it reveals—insights validated through rigorous methods of measurement, inference, and error control.

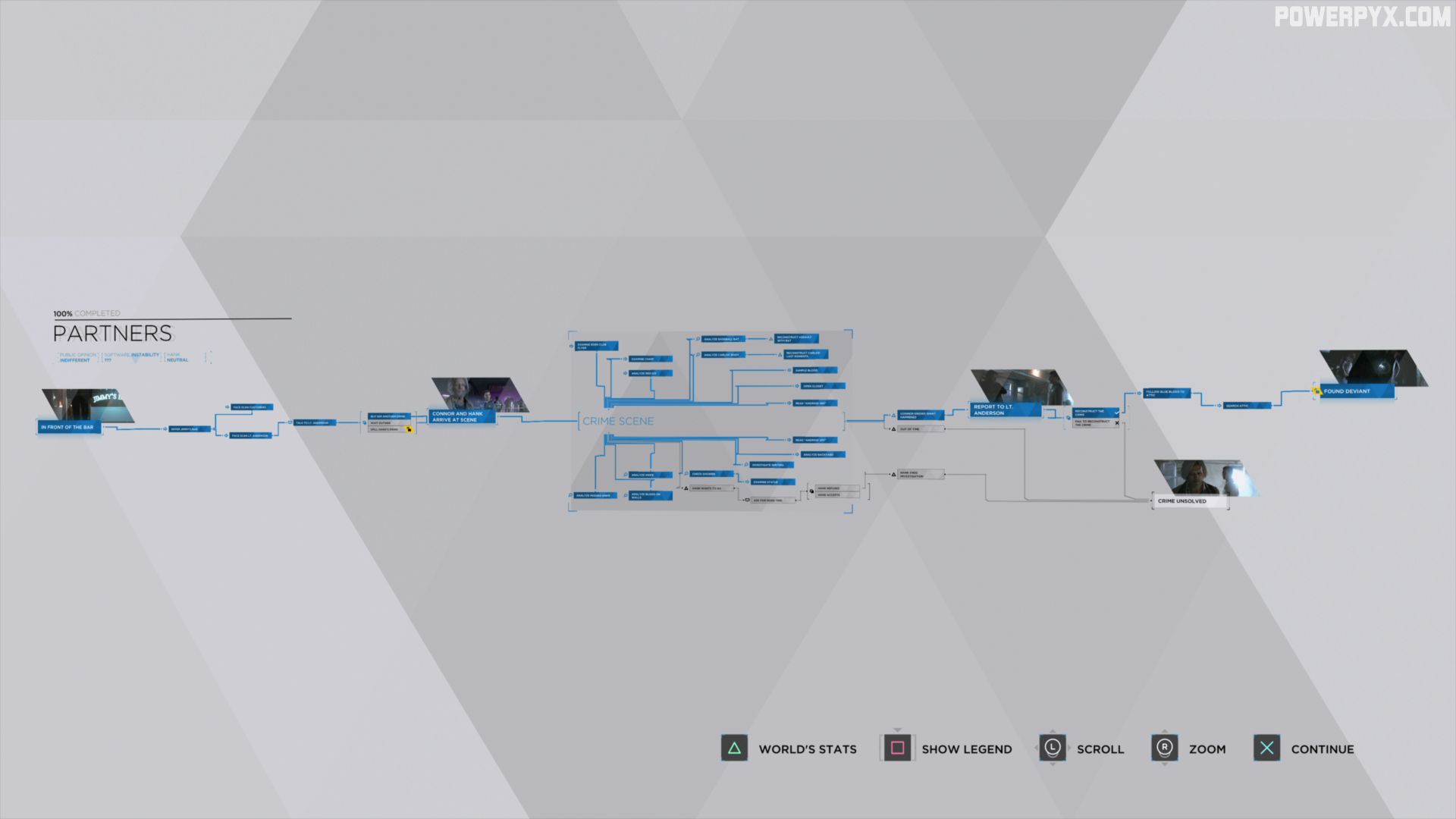

With over 2.5 quintillion bytes of data generated globally each day, distinguishing signal from noise demands more than intuition: it requires disciplined analytical frameworks rooted in sound statistics. Statistical reasoning provides the tools to transform data into knowledge. At its core, this discipline emphasizes four principles: descriptive summarization, inferential analysis, probabilistic forecasting, and causal inference.

Each plays a distinct role in turning numbers into narratives that guide action. Descriptive statistics condense massive datasets into digestible metrics—mean, median, and standard deviation, for instance—enabling quick comparisons. Inferential statistics, meanwhile, allow analysts to extrapolate findings from samples to populations, quantifying uncertainty through confidence intervals and p-values.
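To make the descriptive and inferential measures named above concrete, here is a minimal sketch using only Python's standard library. The function name `summarize` and the `daily_orders` figures are hypothetical, chosen purely for illustration. The interval uses a normal approximation; for a sample this small, a t-distribution would give a somewhat wider interval.

```python
import math
import statistics
from statistics import NormalDist


def summarize(sample, confidence=0.95):
    """Return descriptive statistics plus a normal-approximation CI for the mean."""
    n = len(sample)
    mean = statistics.mean(sample)
    median = statistics.median(sample)
    stdev = statistics.stdev(sample)  # sample standard deviation (n - 1 denominator)

    # Two-sided critical value, about 1.96 for 95% confidence.
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    margin = z * stdev / math.sqrt(n)

    return {
        "n": n,
        "mean": mean,
        "median": median,
        "stdev": stdev,
        "ci": (mean - margin, mean + margin),
    }


# Hypothetical daily order counts, invented for this example.
daily_orders = [102, 98, 110, 95, 105, 99, 101, 97, 108, 103]
summary = summarize(daily_orders)
print(summary)
```

The mean, median, and standard deviation describe the sample itself, while the confidence interval is the inferential step: it expresses how far the population mean could plausibly sit from the sample mean.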

Probabilistic models project future trends under variability, crucial in fields ranging from climate science to finance. Finally, causal reasoning seeks to uncover true relationships, distinguishing correlation from causation—a persistent challenge in big-data ecosystems.

Consider the application of statistical reasoning in public health.

During the COVID-19 pandemic, real-time data modeling informed lockdown strategies and vaccine distribution. The Institute for Health Metrics and Evaluation (IHME) repeatedly projected hospitalization rates using time-series models, and public officials relied on the accompanying uncertainty intervals to assess worst-case scenarios. One key metric, the R number (the effective reproduction number, as distinct from the basic reproduction number R₀, which assumes a fully susceptible population), was estimated from case data and transmission dynamics, enabling dynamic policy adjustments.

Statistical analysis revealed not only the spread of infection but also the relative impact of non-pharmaceutical interventions, with some estimates suggesting that masks reduced transmission by as much as 50%. These insights depended on data quality, random sampling methods, and careful adjustment for confounding variables, the elements that turn raw numbers into evidence for action.

In business, statistical reasoning drives competitive advantage. Market research firms deploy logistic regression and cluster analysis to decode consumer behavior, segment audiences, and forecast demand.

Walmart, for example, uses predictive analytics to anticipate product needs, reportedly optimizing inventory with over 90% accuracy in high-traffic regions. Inventory decisions based on historical sales patterns, seasonal trends, and external variables such as weather or economic indicators reduce waste and boost margins. Retail giants apply A/B testing frameworks, grounded in hypothesis testing and significance levels, to optimize pricing, marketing campaigns, and user interface design.

A/B tests, when designed with a proper power analysis and controlled for bias, yield statistically valid conclusions that are far more reliable than anecdotal evidence.
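The two ingredients mentioned above, a significance test and a power analysis, can be sketched as follows. This is an illustrative outline under textbook assumptions (a two-sided, two-proportion z-test with large samples), not a description of any retailer's actual tooling. The function names and conversion counts are hypothetical.

```python
import math
from statistics import NormalDist


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Two-sided z-test for a difference between two conversion rates."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)  # rate under the null hypothesis
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


def sample_size_per_arm(p_base, p_variant, alpha=0.05, power=0.80):
    """Approximate visitors needed per arm to detect p_base -> p_variant."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    variance = p_base * (1 - p_base) + p_variant * (1 - p_variant)
    return math.ceil((z_alpha + z_beta) ** 2 * variance / (p_base - p_variant) ** 2)


# Hypothetical experiment: 10% baseline conversion, 13% for the variant.
needed = sample_size_per_arm(0.10, 0.13)
z, p_value = two_proportion_z_test(conv_a=200, n_a=2000, conv_b=260, n_b=2000)
print(f"visitors needed per arm: {needed}")
print(f"z = {z:.2f}, p = {p_value:.4f}")
```

Running the power calculation before the experiment matters: stopping a test as soon as the p-value dips below the threshold inflates the false-positive rate.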

Financial markets epitomize the interplay between statistical rigor and economic outcomes. Algorithmic trading platforms leverage time-series modeling, Monte Carlo simulations, and volatility clustering to capture fleeting opportunities.

The Black-Scholes model, a cornerstone of derivatives pricing, relies on assumptions of log-normally distributed asset prices (equivalently, normally distributed log returns), constant volatility, and risk-neutral valuation. Despite known limitations, these principles remain central to option pricing across global exchanges. Quantitative analysts, or quants, apply vector autoregression (VAR) models to infer interdependencies between macroeconomic indicators, enabling portfolio hedging with precision. Yet caution is imperative: statistical significance does not guarantee market relevance, and overfitting models to historical data can lead to catastrophic losses.

The 2008 financial crisis underscored how flawed statistical assumptions—particularly in risk modeling—can cascade into systemic failure.
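For readers who want to see the Black-Scholes formula mentioned above in executable form, the sketch below prices a European call option on a non-dividend-paying asset. The function name, parameter names, and input values are illustrative assumptions. Like the model itself, the code assumes constant volatility and a constant risk-free rate.

```python
import math
from statistics import NormalDist


def black_scholes_call(spot, strike, rate, volatility, years):
    """Black-Scholes price of a European call on a non-dividend-paying asset."""
    sqrt_t = math.sqrt(years)
    d1 = (math.log(spot / strike) + (rate + 0.5 * volatility ** 2) * years) / (
        volatility * sqrt_t
    )
    d2 = d1 - volatility * sqrt_t
    norm_cdf = NormalDist().cdf  # standard normal cumulative distribution
    return spot * norm_cdf(d1) - strike * math.exp(-rate * years) * norm_cdf(d2)


# Hypothetical inputs: at-the-money option, 5% rate, 20% volatility, one year.
price = black_scholes_call(spot=100, strike=100, rate=0.05, volatility=0.20, years=1.0)
print(f"call price: {price:.4f}")
```

The price moves sharply with the `volatility` input. That sensitivity is one reason mis-specified risk assumptions can propagate so far.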

In science, statistical methodologies define the frontier of discovery. The Large Hadron Collider (LHC) at CERN processes petabytes of collision data annually, applying likelihood-based hypothesis tests to isolate rare events such as the Higgs boson signal.

With millions of background events per experiment, a claimed detection must clear a significance threshold far stricter than the p < 0.05 convention common in other fields: particle physics requires five sigma, a one-sided p-value of roughly 3 × 10⁻⁷. Cross-validation and replication of results ensure robust conclusions, minimizing false positives. In genomics, genome-wide association studies (GWAS) scan millions of genetic variants across populations to identify markers linked to disease.

Testing that many variants creates a severe multiple-comparisons problem, demanding correction via the Bonferroni adjustment or false discovery rate (FDR) control, strategies essential to prevent spurious associations in vast datasets. GWAS conventionally applies a genome-wide significance threshold of p < 5 × 10⁻⁸ for this reason.
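The difference between the two corrections named above is easier to see side by side. The sketch below implements the Bonferroni adjustment and the Benjamini-Hochberg procedure, a widely used FDR method. The function names and p-values are hypothetical, and real GWAS pipelines operate on millions of tests rather than ten.

```python
def bonferroni(p_values, alpha=0.05):
    """Reject only hypotheses whose p-value is at most alpha / m."""
    m = len(p_values)
    return [p <= alpha / m for p in p_values]


def benjamini_hochberg(p_values, alpha=0.05):
    """Benjamini-Hochberg step-up procedure controlling the false discovery rate."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])  # indices by ascending p

    # Find the largest rank k such that p_(k) <= (k / m) * alpha.
    cutoff_rank = 0
    for rank, idx in enumerate(order, start=1):
        if p_values[idx] <= rank / m * alpha:
            cutoff_rank = rank

    # Reject every hypothesis ranked at or below the cutoff.
    rejected = [False] * m
    for rank, idx in enumerate(order, start=1):
        if rank <= cutoff_rank:
            rejected[idx] = True
    return rejected


# Hypothetical p-values from ten independent tests.
p_values = [0.001, 0.008, 0.039, 0.041, 0.042, 0.06, 0.074, 0.205, 0.212, 0.216]
bonf = bonferroni(p_values)
bh = benjamini_hochberg(p_values)
print("Bonferroni rejections:", sum(bonf))
print("Benjamini-Hochberg rejections:", sum(bh))
```

Bonferroni controls the chance of even a single false positive and is therefore stricter. Benjamini-Hochberg tolerates a bounded proportion of false discoveries in exchange for more statistical power.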

The credibility of statistical reasoning hinges on transparency, reproducibility, and awareness of bias. Cognitive biases—such as confirmation bias or base rate neglect—can distort interpretation even when data is correct.

The practice of “p-hacking”, in which data are reanalyzed or selectively sliced until statistically significant results emerge, has spurred reforms toward pre-registration and open science. Initiatives like the Registered Reports model, embraced by top journals, require study designs and analysis plans to be submitted and reviewed before data collection, reducing selective reporting. Meanwhile, the replication crisis in psychology and medicine has reinforced the need for large sample sizes, peer review, and effect size reporting over mere p-values.

The evolution of big data and artificial intelligence has amplified both the opportunities and challenges of statistical inference. Machine learning models, though powerful, often trade interpretability for predictive accuracy, demanding new frameworks to quantify uncertainty. Techniques like bootstrapping, cross-validation, and probabilistic neural networks help recapture statistical rigor.
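Bootstrapping, one of the techniques listed above, estimates uncertainty by resampling the observed data with replacement. The sketch below computes a percentile bootstrap interval for the median. The function name, the fixed seed, and the response-time data are assumptions made for illustration.

```python
import random
import statistics


def bootstrap_ci(sample, stat=statistics.median, n_resamples=5000,
                 confidence=0.95, seed=0):
    """Percentile bootstrap confidence interval for an arbitrary statistic."""
    rng = random.Random(seed)  # fixed seed so the example is reproducible
    n = len(sample)

    # Recompute the statistic on many resamples drawn with replacement.
    estimates = sorted(
        stat(rng.choices(sample, k=n)) for _ in range(n_resamples)
    )

    lower_idx = int((1 - confidence) / 2 * n_resamples)
    upper_idx = int((1 + confidence) / 2 * n_resamples) - 1
    return estimates[lower_idx], estimates[upper_idx]


# Hypothetical response times in milliseconds, including one outlier.
response_ms = [12, 15, 14, 10, 18, 21, 13, 16, 14, 95]
low, high = bootstrap_ci(response_ms)
print(f"95% bootstrap CI for the median: ({low}, {high})")
```

The appeal of the method is that it makes no distributional assumption about the data. That helps with statistics such as the median and with model outputs that lack a closed-form standard error.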

Ethical considerations now intersect with analytical practices: biased training data can perpetuate inequities in facial recognition, credit scoring, and hiring algorithms. Auditing models for fairness and transparency has become a practical necessity, not just a technical exercise. Statistical reasoning, therefore, extends beyond computation—it demands responsibility, contextual understanding, and ongoing scrutiny.
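A fairness audit can start with something as simple as comparing outcome rates across groups. The sketch below computes the demographic parity gap, one of several possible fairness metrics and not a complete audit. The function names, group labels, and decision records are all hypothetical.

```python
from collections import defaultdict


def selection_rates(records):
    """Share of positive decisions per group, from (group, approved) pairs."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, approved in records:
        totals[group] += 1
        positives[group] += int(approved)
    return {group: positives[group] / totals[group] for group in totals}


def demographic_parity_gap(records):
    """Difference between the highest and lowest group selection rates."""
    rates = selection_rates(records)
    return max(rates.values()) - min(rates.values())


# Hypothetical model decisions for two applicant groups.
records = (
    [("A", True)] * 6 + [("A", False)] * 4
    + [("B", True)] * 3 + [("B", False)] * 7
)
rates = selection_rates(records)
gap = demographic_parity_gap(records)
print(rates, f"gap = {gap:.2f}")
```

A large gap does not prove discrimination by itself, since groups may differ in relevant ways. It does flag where the contextual scrutiny described above should begin.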

Across sectors, data-driven decisions no longer rely on guesswork but on a foundation built through years of statistical innovation. From calibrating nuclear reactors using sensitivity analysis to personalizing education through adaptive learning models, the ability to reason statistically determines outcomes with measurable impact. With tools ranging from basic descriptive measures to advanced causal inference, professionals wield unprecedented power to shape policies, products, and progress.

Yet the true measure of success lies not in numbers alone, but in the wisdom behind them—the synthesis of data, context, and critical judgment that converts statistics into sound action.
