JointThatCanBeFlickedNyt: The Game-Changing Mechanic Redefining Modern Play


In the fast-evolving landscape of interactive entertainment, one feature is quietly changing how players engage with digital objects: “JointThatCanBeFlickedNyt.” This elusive yet powerful mechanic lets a single hand flick trigger a dynamic joint response, bringing a fluidity and realism of movement that most games have not previously achieved. No longer limited to theme parks or niche demos, flick-based joint interaction is now spreading into mainstream gaming, film, and virtual reality. As developers push boundaries, the fusion of joint fidelity and instant flick input marks a transformative shift, one where playfulness meets precision.

At its core, JointThatCanBeFlickedNyt refers to a technical and design innovation that allows virtual jointed components (such as hinges, prosthetics, or articulated armor) to respond immediately and predictably to a flick gesture. This capability relies on advanced physics engines, motion-responsive sensors, and carefully calibrated input sensitivity. Unlike traditional click-based controls, which register only a discrete press, a flick carries speed and direction, so it mimics real-world mechanics and creates a visceral connection between the player's action and the object's response.

"The key insight," explains game design lead Marcus Trent, "is translating physical gesture into digital feedback with split-second accuracy. When a joint responds instantly to a flick, it’s not just gameplay—it’s presence."

Technically, implementing JointThatCanBeFlickedNyt requires close integration of software and hardware. Motion-capture data informs the elasticity and damping characteristics of virtual joints, so that each flick produces a believable flex, twist, or snap.
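As a rough illustration of how elasticity and damping shape a flick response, the sketch below models a single hinge as a damped torsional spring and treats the flick as an instantaneous angular impulse. The class `FlickableJoint`, the helper `simulate_flick`, and all parameter values are hypothetical; in a real pipeline the stiffness and damping would presumably be fitted to motion-capture data rather than hard-coded, and a production physics engine would normally handle this internally.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class FlickableJoint:
    """Hypothetical one-axis hinge modelled as a damped torsional spring."""
    stiffness: float = 40.0   # k in N*m/rad (assumed; would be fitted from mocap)
    damping: float = 4.0      # c in N*m*s/rad (assumed)
    inertia: float = 0.5      # I in kg*m^2 (assumed)
    angle: float = 0.0        # rad, offset from the rest pose
    velocity: float = 0.0     # rad/s

    def flick(self, angular_impulse: float) -> None:
        # Treat the flick as an instantaneous angular impulse J: delta_omega = J / I.
        self.velocity += angular_impulse / self.inertia

    def step(self, dt: float) -> None:
        # Spring torque pulls toward rest; damping torque opposes motion.
        torque = -self.stiffness * self.angle - self.damping * self.velocity
        # Semi-implicit (symplectic) Euler: update velocity first, then angle.
        self.velocity += (torque / self.inertia) * dt
        self.angle += self.velocity * dt

    def damping_ratio(self) -> float:
        # zeta < 1: springy overshoot ("flex"); zeta >= 1: settles without oscillating.
        return self.damping / (2.0 * (self.stiffness * self.inertia) ** 0.5)


def simulate_flick(joint: FlickableJoint, impulse: float,
                   dt: float = 1 / 120, steps: int = 240) -> List[float]:
    """Apply one flick, then record the joint angle at each physics step."""
    joint.flick(impulse)
    trace = []
    for _ in range(steps):
        joint.step(dt)
        trace.append(joint.angle)
    return trace


# Usage: a 0.5 N*m*s flick on the default joint, simulated for 2 s at 120 Hz.
trace = simulate_flick(FlickableJoint(), impulse=0.5)
print(f"zeta={FlickableJoint().damping_ratio():.3f}, peak={max(trace):.3f} rad")
```

With these assumed values the damping ratio is about 0.45, so the joint overshoots its rest pose and oscillates briefly before settling, which reads as a springy flex. Raising `damping` toward `2 * sqrt(stiffness * inertia)` removes the overshoot for a stiffer, more controlled snap.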

Input systems, whether touchscreens, VR controllers, or motion-tracking sensors, must register flicks with minimal latency (often under 20 milliseconds) to maintain immersion. Developers describe calibration as complex: responsiveness has to be balanced against control so that players feel empowered, not overridden. According to lead programmer Lena Cho, “It’s a tightrope walk between intuitive design and technical precision. Too sensitive, and flicks become stilettos; too sluggish, and the illusion shatters.”
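One common way to decide whether a gesture was a flick rather than a slow drag is to measure the pointer's velocity just before release and compare it with a threshold; that threshold is the sensitivity knob Cho describes. The sketch below assumes a simple `(timestamp_ms, x, y)` sample format; `detect_flick`, `within_latency_budget`, and the default thresholds are illustrative choices, not values from any particular engine or platform API.

```python
import math
from dataclasses import dataclass
from typing import Optional, Sequence, Tuple

# Hypothetical input format: (timestamp in ms, x in px, y in px).
Sample = Tuple[float, float, float]


@dataclass
class Flick:
    speed: float      # px/ms over the final stretch of the gesture
    direction: float  # radians, from atan2(dy, dx)


def detect_flick(samples: Sequence[Sample],
                 min_speed: float = 0.5,
                 window_ms: float = 50.0) -> Optional[Flick]:
    """Return a Flick if the gesture's release velocity clears min_speed.

    min_speed is the sensitivity knob; both defaults are illustrative.
    """
    if len(samples) < 2:
        return None
    t_end, x_end, y_end = samples[-1]
    # Only the motion just before release matters, so a slow drag that
    # ends in a quick snap still counts as a flick.
    recent = [s for s in samples if t_end - s[0] <= window_ms]
    if len(recent) < 2:
        # Sparse input: fall back to the last two samples.
        recent = list(samples[-2:])
    t0, x0, y0 = recent[0]
    dt = t_end - t0
    if dt <= 0:
        return None
    dx, dy = x_end - x0, y_end - y0
    speed = math.hypot(dx, dy) / dt
    if speed < min_speed:
        return None
    return Flick(speed=speed, direction=math.atan2(dy, dx))


def within_latency_budget(input_ms: float, response_ms: float,
                          budget_ms: float = 20.0) -> bool:
    """Check input-to-response time against the ~20 ms figure in the text."""
    return 0.0 <= response_ms - input_ms <= budget_ms
```

Tuning `min_speed` and `window_ms` is where the tightrope walk happens: a low threshold turns casual drags into accidental flicks, while a high one makes deliberate flicks feel ignored.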

Games that use this mechanic show tangible gains in interactivity and emotional engagement. In action-adventure titles, characters now manipulate tools and gear with fluid, natural motion: flicking a wrist joint to swing from a rope, or angling a prosthetic limb for stability during climbing sequences. In games built around JointThatCanBeFlickedNyt, champions swing through virtual worlds with the grace of trained athletes, their movements shaped by split-second flicks rather than rigid command inputs.

Sci-fi designers are incorporating similar principles into spacesuit mechanics, enabling glide sticks or joint-controlled hacking interfaces that react instantly to subtle finger flicks. The result? Players don’t just play; they embody their characters.

Beyond gaming, the ripple effects of JointThatCanBeFlickedNyt extend into film and training simulations. In interactive cinematic experiences, audiences can “flick” character limbs to explore environments in a new tactile dimension, deepening immersion without breaking narrative flow. Flight simulators and medical training tools now replicate joint movement with lifelike fidelity: a flick of the wrist or bend of the elbow triggers a realistic biomechanical response, which helps trainees build and retain muscle memory.

“This isn’t just about fun,” states immersive tech specialist Rajiv Mehta. “It’s about building intuitive connections between human gesture and digital outcome—bridging perception and performance.”

Adoption hurdles remain, particularly around input parity across platforms. Casual mobile users accustomed to touch gestures may resist finicky dedicated controls, while VR headsets vary widely in sensor accuracy.

Yet innovators emphasize that these challenges drive refinement. “The goal,” says Trent, “is making flick-based joint interaction feel second nature, like tightening a glove before a throw, not thinking about sensors or latency.” Early adopters in indie studios report notably higher retention and engagement when the mechanic is integrated thoughtfully, suggesting that, once polished, JointThatCanBeFlickedNyt could become an expected standard.

Looking forward, the evolution of this mechanic depends on cross-disciplinary collaboration. Hardware manufacturers are improving tracking accuracy, while software engineers refine predictive algorithms that reduce perceived flick latency and enhance joint realism. Educational platforms are experimenting with joint-based interaction for STEM and rehabilitation, where precise motion mirrors real-world training. As Trent notes, “We’re not just building a feature; we’re inventing a new language of interaction.” Whether in a pulse-pounding game, a lifelike film scene, or a life-saving simulation, JointThatCanBeFlickedNyt is proving that the future of play is not just about what you control, but how instinctively you control it.
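The article does not say which predictive algorithms are in use. One of the simplest options, shown here purely as an assumed example, is linear extrapolation: estimate velocity from the last two input samples and render the joint where the hand is expected to be a few milliseconds from now, masking part of the input-to-display delay. The function `predict_position`, its sample format, and its look-ahead cap are hypothetical; real systems may use more robust filtering (for example, Kalman filters).

```python
from typing import Sequence, Tuple

# Hypothetical input format: (timestamp in ms, x in px, y in px).
Sample = Tuple[float, float, float]


def predict_position(samples: Sequence[Sample], lookahead_ms: float,
                     max_lookahead_ms: float = 30.0) -> Tuple[float, float]:
    """Linearly extrapolate the input point lookahead_ms into the future."""
    if not samples:
        raise ValueError("need at least one sample")
    t1, x1, y1 = samples[-1]
    if len(samples) < 2:
        return (x1, y1)  # no velocity estimate yet; use the last known point
    t0, x0, y0 = samples[-2]
    dt = t1 - t0
    if dt <= 0:
        return (x1, y1)  # duplicate or out-of-order timestamps
    # Velocity from the two most recent samples, in px/ms.
    vx, vy = (x1 - x0) / dt, (y1 - y0) / dt
    # Clamp the look-ahead so abrupt stops or reversals overshoot less.
    h = min(max(lookahead_ms, 0.0), max_lookahead_ms)
    return (x1 + vx * h, y1 + vy * h)
```

The cap on `lookahead_ms` is a deliberate trade-off: the further ahead the prediction reaches, the more latency it hides, but the more it overshoots when a flick stops or reverses.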

More than a novelty, JointThatCanBeFlickedNyt represents a paradigm shift: a seamless bridge between human motion and digital expression. It turns physical intent into digital outcome with unprecedented clarity, transforming games and experiences from passive entertainment into active, embodied engagement. As this innovation spreads, it invites players—and designers alike—to reimagine what interaction can truly feel like.

Related Post

Enedina Arellano Felix: The Quiet Force Behind the Tijuana Cartel’s Foundation

Deadly and Disabling Disorders That Start With D

Where Is Elon Musk Today? Tracking the Worlds Most Watchable Entrepreneur in Real Time

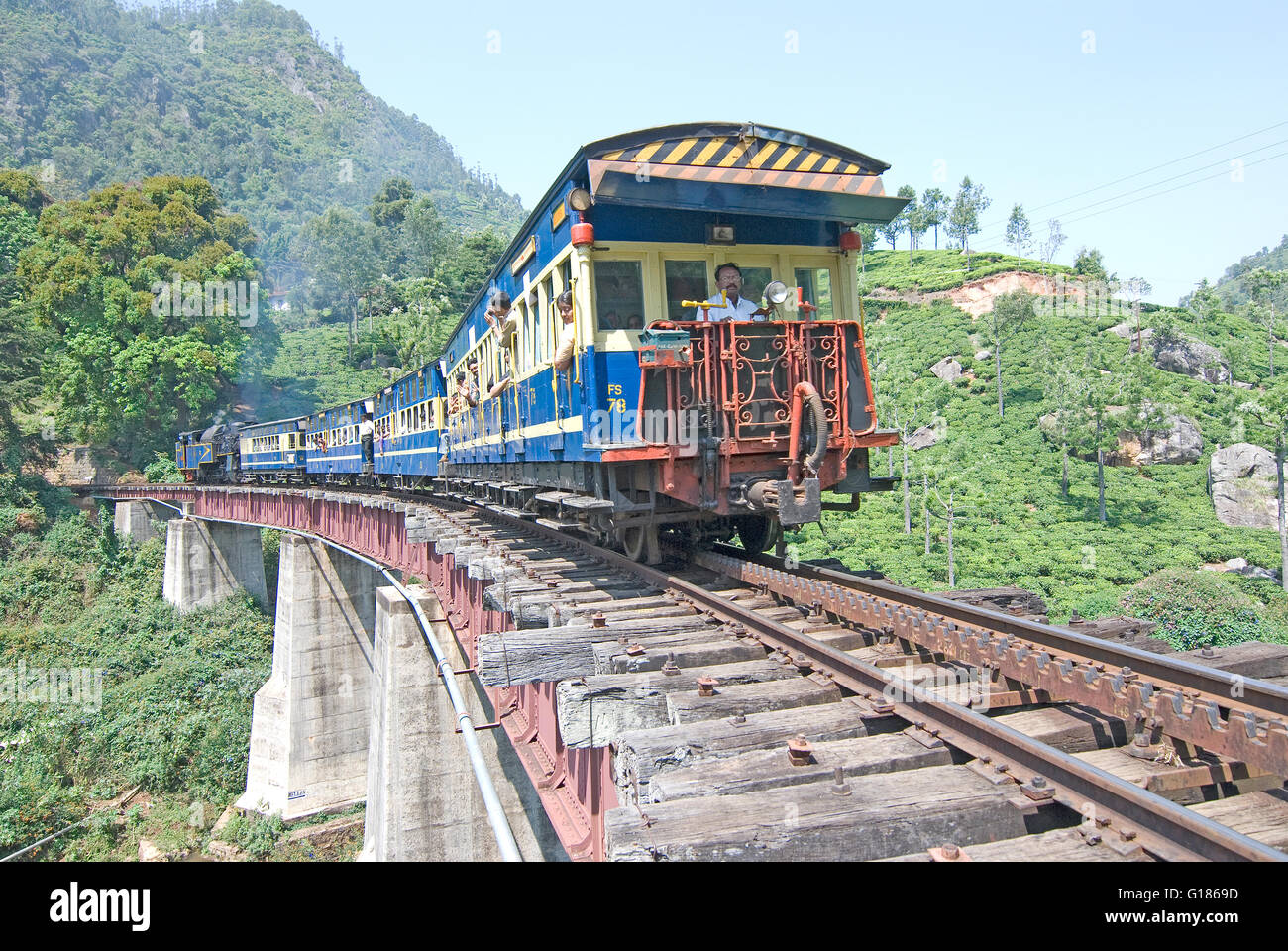

Ragam TV Nilgiri News: What’s Happening Across Nilgiris Today – A Day of Local Pulse, Power Shifts, and Pulse-Pounding Events