SubtractTheMeanFromTheDataPoint: The Quiet Revolution Transforming Data Analysis

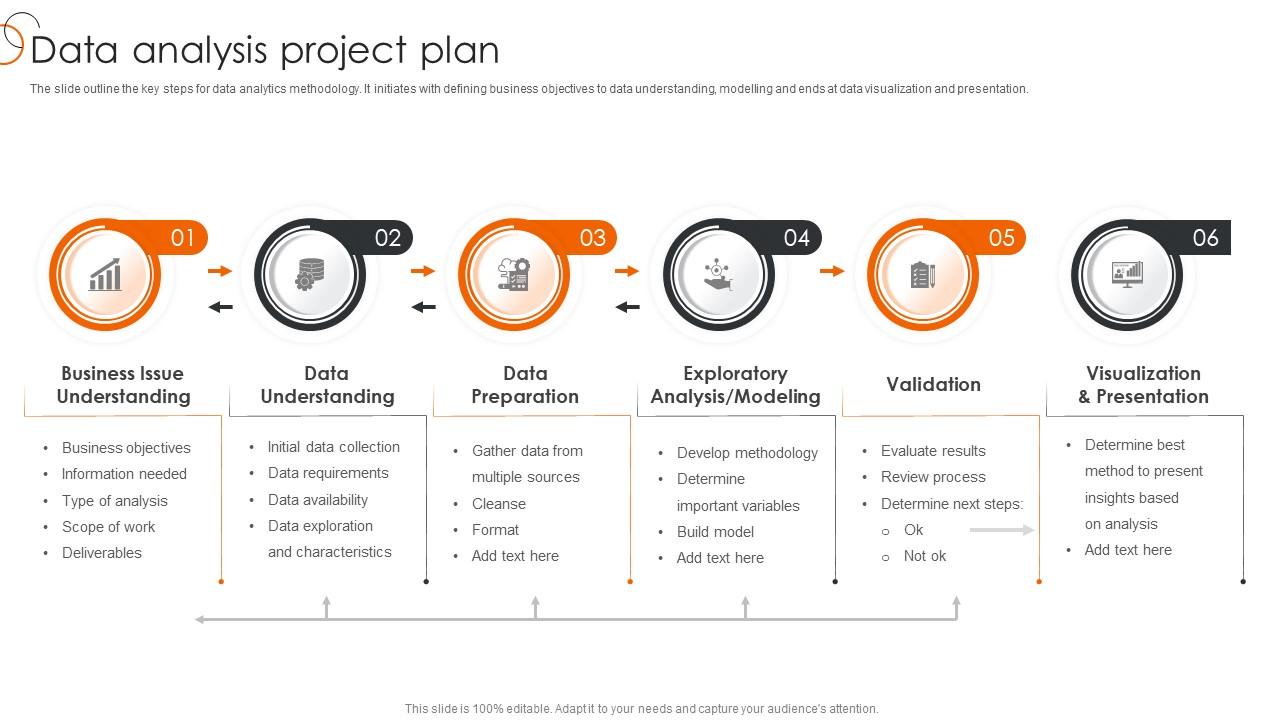

When analysts seek clarity in noise, a deceptively simple mathematical operation holds profound power: subtracting the mean from each data point. Known formally as mean-centering, and referred to here by the method name SubtractTheMeanFromTheDataPoint, this transformation re-expresses raw values as deviations from their average. Add a second step, dividing each deviation by the standard deviation (the full z-score), and the values become standardized units that reveal patterns invisible to the naked eye. By centering data around zero and scaling spread by the standard deviation, analysts gain deeper insight into variation, outliers, and distribution, turning chaotic datasets into comprehensible narratives.

At its core, SubtractTheMeanFromTheDataPoint means adjusting every observation by the average value of the dataset. Given a data set {x₁, x₂, ..., xₙ}, the transformation first computes the mean, μ = (x₁ + x₂ + ... + xₙ)/n, and then produces a new series: zᵢ = xᵢ – μ. The result?

Each number now describes how far, and in which direction, a point lies from the center, still measured in the data's original units. A value of 0 marks the mean, positive numbers sit above it, and negative numbers sit below it. Dividing these deviations by the standard deviation goes one step further and expresses each one as a number of standard deviations from the mean. That standardization turns disparate scales into a common language, enabling meaningful comparisons across experiments, surveys, or time series.
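A minimal sketch of the centering step described above, using Python's standard `statistics` module. The helper name `center` and the sample values are hypothetical, chosen only for illustration. The second check shows that centering shifts values without changing their spread.

```python
from statistics import fmean, pstdev

def center(values):
    """Hypothetical helper: return x_i - mu for every value, where mu is the arithmetic mean."""
    if not values:
        raise ValueError("cannot center an empty sequence")
    mu = fmean(values)
    return [x - mu for x in values]

data = [2.0, 4.0, 6.0, 8.0]  # hypothetical sample, mean 5.0
centered = center(data)
print(centered)                           # [-3.0, -1.0, 1.0, 3.0]
print(pstdev(data) == pstdev(centered))   # True: centering moves the values but keeps their spread
```

Because centering only shifts the data, the results stay in the original units; dividing by the standard deviation is a separate step.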

Why does this matter? Consider a healthcare study measuring blood pressure reductions across patients. One group shows consistently higher changes than another—not necessarily because their treatment worked better, but because their starting values differed widely.

By subtracting the relevant mean from each reading, researchers express every change relative to a common reference point, which makes differences in baseline easier to separate from differences in treatment effect. “This isn’t just math—it’s a lens,” explains Dr. Elena Martinez, a biostatistician at the Institute for Data Science. “Without centering, variation gets obscured. With it, we see genuine trends.”

The method’s utility extends far beyond clinical research. In finance, analysts apply SubtractTheMeanFromTheDataPoint to assess stock returns relative to market averages, identifying which investments consistently deviate from the norm.
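As a sketch of the finance use, assume the "market average" is the equal-weighted mean return of a small set of stocks on a single day (real indexes are often weighted differently). The tickers, returns, and the helper `demean_cross_section` are hypothetical.

```python
from statistics import fmean

# Hypothetical one-day returns (as fractions) for a made-up universe of stocks.
returns = {"AAA": 0.020, "BBB": 0.005, "CCC": -0.010, "DDD": 0.001}

def demean_cross_section(day_returns):
    """Hypothetical helper: subtract the equal-weighted average return from each stock's return."""
    market_avg = fmean(day_returns.values())
    return {ticker: r - market_avg for ticker, r in day_returns.items()}

relative = demean_cross_section(returns)
# Positive values beat the day's average; negative values lagged it.
for ticker, r in sorted(relative.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{ticker}: {r:+.4f}")
```

Repeating this day after day would show which stocks sit above or below the average persistently rather than occasionally.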

In education, standardized test scores use the same principle to compare student performance across diverse curricula and demographics. Machine learning pipelines rely on this preprocessing step too: many algorithms, particularly gradient-based and distance-based ones, converge faster and perform better when features are centered and scaled rather than left in raw, unscaled units.
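A minimal sketch of that preprocessing step, assuming a small feature matrix stored as Python lists. The key design choice is that the means and standard deviations are learned from training data only and then reused on new data. The names `fit_standardizer` and `transform` are hypothetical, not a library API.

```python
from statistics import fmean, pstdev

def fit_standardizer(rows):
    """Hypothetical helper: learn per-column means and population standard deviations."""
    columns = list(zip(*rows))
    means = [fmean(col) for col in columns]
    stds = [pstdev(col) for col in columns]
    return means, stds

def transform(rows, means, stds):
    """Hypothetical helper: center and scale each column with previously learned statistics."""
    return [
        # A constant column (std == 0) has no spread; map it to 0.0 instead of dividing by zero.
        [(x - m) / s if s > 0 else 0.0 for x, m, s in zip(row, means, stds)]
        for row in rows
    ]

# Hypothetical data: each row is one example, each column one feature on a very different scale.
train = [[1.0, 100.0], [2.0, 200.0], [3.0, 300.0]]
new_data = [[4.0, 250.0]]

means, stds = fit_standardizer(train)
train_scaled = transform(train, means, stds)
new_scaled = transform(new_data, means, stds)  # reuse training statistics; do not refit on new data
print(means)  # [2.0, 200.0]
print(new_scaled)
```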

To perform the operation, start by gathering your dataset.

Calculate the mean: sum all values and divide by the count. Then subtract that mean from each individual value. For example, if the mean height of a sample is 170 cm and one measurement is 165 cm, the adjusted value is –5 cm.

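The adjusted value shows at a glance that this person is 5 cm shorter than average, context the raw 165 cm does not convey on its own, and its sign gives the direction. The centered data as a whole has a mean of exactly zero; if each value is also divided by the standard deviation, the variance becomes exactly one (provided the same definition of σ is used for scaling and for measuring the variance).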
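The steps above can be sketched in a few lines of Python. The four heights are hypothetical, chosen so the mean is exactly 170 cm and the 165 cm reading becomes –5 cm. The optional last step divides by the population standard deviation, which is one of two common conventions (the other divides by n − 1).

```python
from statistics import pstdev, pvariance

# Step 1: gather the data (a hypothetical sample of heights in centimetres).
heights_cm = [165.0, 172.0, 168.0, 175.0]

# Step 2: compute the mean by summing all values and dividing by the count.
mean_cm = sum(heights_cm) / len(heights_cm)

# Step 3: subtract the mean from each value (centering; results are still in cm).
centered_cm = [h - mean_cm for h in heights_cm]

# Optional step 4: divide by the population standard deviation to get z-scores.
sigma_cm = pstdev(heights_cm)
z_scores = [c / sigma_cm for c in centered_cm]

print(mean_cm)                        # 170.0
print(centered_cm)                    # [-5.0, 2.0, -2.0, 5.0]
print(sum(centered_cm))               # 0.0
print(round(pvariance(z_scores), 10)) # 1.0
```

If the scaling used the sample standard deviation (`statistics.stdev`) instead, the matching sample variance (`statistics.variance`) of the z-scores would be the one equal to 1.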

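What about extreme values, unusually high or unusually low? Centering does not remove outliers, but it makes them easier to detect. Once data are expressed relative to the mean, and especially once they are scaled by the standard deviation, extreme values stand out even where noise in the raw numbers would mask them.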

A z-score whose absolute value exceeds 2 or 3 alerts analysts to anomalies that warrant investigation. “It’s not that outliers disappear,” says Jonathan Reed, data scientist at GlobalMetrics. “But they jump out, demanding scrutiny rather than blending in.” Standardization’s power lies in its simplicity and universality.
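The ±2 / ±3 rule of thumb can be sketched as a simple filter. The function `flag_outliers` and the readings are hypothetical; the threshold is a parameter because the right cutoff depends on the data and the cost of false alarms.

```python
from statistics import fmean, pstdev

def flag_outliers(values, threshold=3.0):
    """Hypothetical helper: return (index, value, z) for points with |z| above the threshold."""
    mu = fmean(values)
    sigma = pstdev(values)
    if sigma == 0:
        return []  # all values identical: nothing can stand out
    flagged = []
    for i, x in enumerate(values):
        z = (x - mu) / sigma
        if abs(z) > threshold:
            flagged.append((i, x, z))
    return flagged

readings = [10.0, 11.0, 9.5, 10.5, 10.2, 9.8, 10.1, 30.0]  # hypothetical readings
print(flag_outliers(readings, threshold=2.0))  # flags only index 7 (the 30.0 reading)
print(flag_outliers(readings, threshold=3.0))  # []
```

One caution: the outlier inflates σ itself. With only eight points, no |z| computed with the population standard deviation can exceed √7 ≈ 2.65, so a cutoff of 3 flags nothing here even though 30.0 is clearly unusual.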

Unlike complex modeling, z-scores require only basic arithmetic, a mean, and a standard deviation, which makes them accessible to students, professionals, and automated systems alike. Yet the implications are far-reaching: teams can compare results on one shared scale, personalized medicine advances through patient data aligned to a common reference, and portfolio managers can gauge each holding's risk relative to the market as conditions shift.

The full z-score formula is elegant: z = (x – μ)/σ, where σ is the standard deviation. Yet its implementation demands care: robust alternatives such as the median and the median absolute deviation guard against skewed or outlier-heavy datasets, and missing values must be handled before μ and σ can be computed. When applied rigorously, SubtractTheMeanFromTheDataPoint remains a foundational pillar, quietly guiding clearer discovery across science, business, and beyond.
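The caveats about robust statistics and missing values can be made concrete with a sketch. This swaps in a different technique from the plain z-score: the median replaces μ, and the median absolute deviation (MAD), multiplied by about 1.4826 so it matches σ for normally distributed data, replaces σ. Treating `None` and NaN as "missing" is an assumption, and `robust_z` is a hypothetical name.

```python
import math
from statistics import median

def is_missing(x):
    """Assumption for this sketch: None and NaN both mark a missing value."""
    return x is None or math.isnan(x)

def robust_z(values, scale=1.4826):
    """Hypothetical helper: (x - median) / (scale * MAD), skipping missing entries.

    Missing entries are excluded from the statistics and returned as None.
    """
    present = [x for x in values if not is_missing(x)]
    if not present:
        raise ValueError("no non-missing values")
    med = median(present)
    mad = median([abs(x - med) for x in present])
    if mad == 0:
        raise ValueError("MAD is zero; the robust scale is undefined")
    denom = scale * mad
    return [None if is_missing(x) else (x - med) / denom for x in values]

readings = [10.0, 11.0, None, 9.5, 10.5, float("nan"), 30.0]  # hypothetical, with gaps
print(robust_z(readings))
```

Because the median and MAD barely move when a single extreme value is added, the 30.0 reading receives a very large robust score instead of being partly hidden by an inflated σ.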

In an era overwhelmed by data, this technique endures as a beacon of precision. By tethering values to their collective center, analysts transform chaos into clarity, one subtracted mean at a time.

Related Post

Bill Melugin: The Dynamic Reporter Redefining Modern Journalism

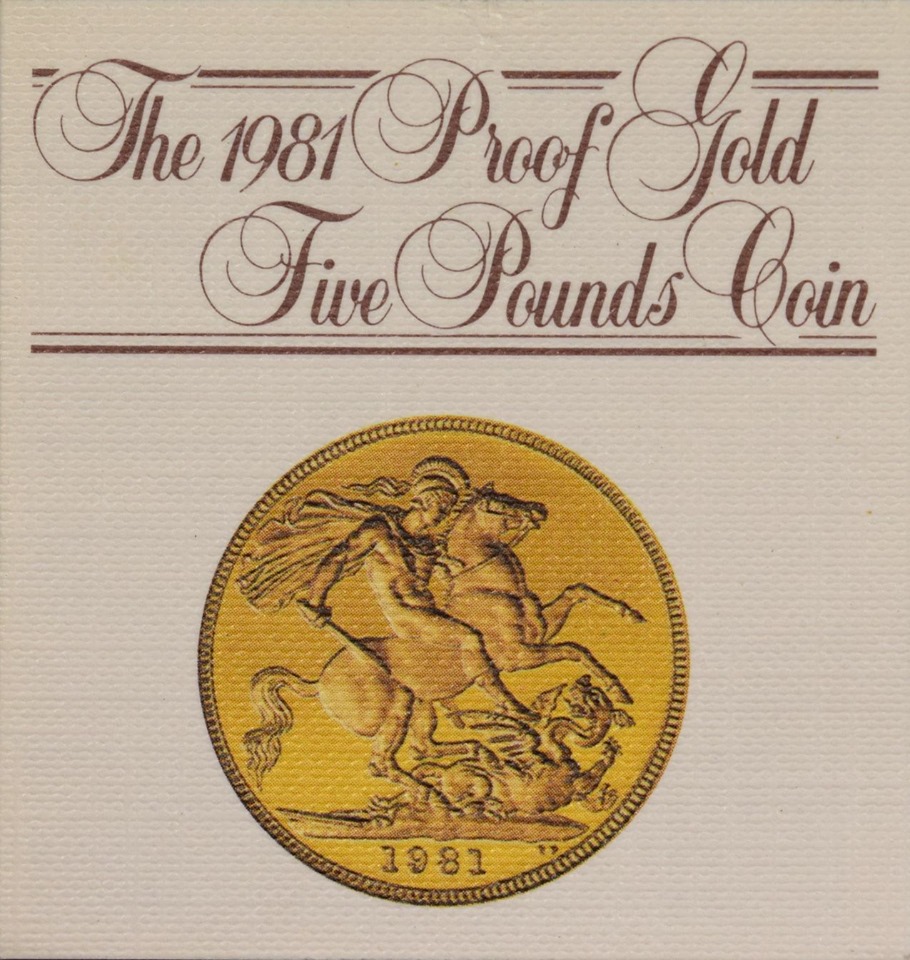

1981 Elizabeth II Coin: What’s Its True Collector Value?

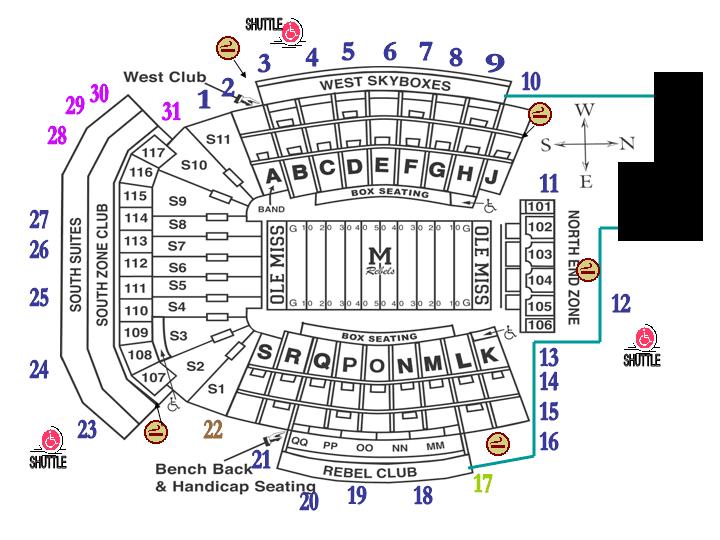

Vaught-Hemingway Stadium: Where Tradition Meets Modern Sport in Southeast Georgia

20 Stone to Pounds: Precision, Context, and Everyday Use Behind the Conversion