How AI-Augmented Debugging Is Reshaping Modern Software Development

As software grows more complex, traditional debugging methods struggle to keep pace: sudden system failures, subtle logic errors, and massive codebases challenge even the most experienced developers. A transformative shift is underway. AI-augmented debugging combines machine learning, pattern recognition, and real-time analytics to detect, diagnose, and sometimes resolve software defects with greater speed and precision than manual triage alone. This emerging discipline is not replacing developers; it equips them with intelligent tools that turn chaotic troubleshooting into structured, data-driven problem-solving.

By analyzing vast code histories, predicting failure points, and suggesting fixes, AI-driven systems are redefining how bugs are managed across industries—from fintech to healthcare, from embedded systems to cloud platforms.

At its core, AI-augmented debugging uses a range of advanced computational techniques to interpret code context, anticipate errors, and recommend solutions. Machine learning models trained on large corpora of code learn to identify patterns linked to common failures, such as race conditions, memory leaks, or type mismatches, often before they manifest in production. Unlike fixed rule-based static analyzers, these tools can be retrained as new data arrives, so their accuracy can improve over time.
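To make "learning patterns linked to failures" concrete, here is a minimal Python sketch that swaps the large models described above for a multinomial naive Bayes classifier over code tokens. The training snippets and the "buggy"/"clean" labels are hypothetical stand-ins for a real labeled bug history, and production tools use far richer representations than a bag of tokens.

```python
import math
import re
from collections import Counter, defaultdict

# Hypothetical labeled history: (snippet, label). A real tool would mine
# these from bug trackers and the commits that fixed them.
TRAINING = [
    ("f = open(path)\ndata = f.read()", "buggy"),
    ("lock.acquire()\nshared += 1", "buggy"),
    ("conn = connect(url)\nrows = conn.query(sql)", "buggy"),
    ("with open(path) as f:\n    data = f.read()", "clean"),
    ("with lock:\n    shared += 1", "clean"),
    ("with connect(url) as conn:\n    rows = conn.query(sql)", "clean"),
]


def tokenize(code: str) -> list[str]:
    """Split source text into word tokens and single punctuation characters."""
    return re.findall(r"\w+|[^\s\w]", code)


class NaiveBayesBugModel:
    """Multinomial naive Bayes over code tokens, with add-one smoothing."""

    def __init__(self) -> None:
        self.token_counts: dict[str, Counter] = defaultdict(Counter)
        self.doc_counts: Counter = Counter()

    def fit(self, samples: list[tuple[str, str]]) -> None:
        for code, label in samples:
            self.doc_counts[label] += 1
            self.token_counts[label].update(tokenize(code))

    def predict(self, code: str) -> str:
        vocab = set().union(*self.token_counts.values())
        total_docs = sum(self.doc_counts.values())
        scores = {}
        for label, counts in self.token_counts.items():
            # log P(label) + sum of log P(token | label); smoothing keeps
            # unseen tokens from zeroing out a class.
            denom = sum(counts.values()) + len(vocab)
            score = math.log(self.doc_counts[label] / total_docs)
            for tok in tokenize(code):
                score += math.log((counts[tok] + 1) / denom)
            scores[label] = score
        return max(scores, key=scores.get)


if __name__ == "__main__":
    model = NaiveBayesBugModel()
    model.fit(TRAINING)
    print(model.predict("g = open(name)\ntext = g.read()"))  # prints "buggy"
```

The classifier never sees the word "leak"; it simply learns that bare `open(`/`acquire(` calls co-occur with the buggy label, which is the essence (in miniature) of pattern-based defect prediction.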

“These systems don’t just flag errors—they explain why they happen,” says Dr. Lila Nair, a principal software engineer specializing in AI-driven development at Adevaru.Ro. “By correlating historical bug data with current execution traces, they deliver actionable insights that dramatically reduce mean time to resolution.”
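Correlating a new failure with historical bug data can be approximated, very roughly, with text similarity. This Python sketch ranks a hypothetical history of resolved bugs by bag-of-words cosine similarity to a new error message. The bug IDs and messages are invented for illustration; a real tool would more likely use learned embeddings over messages, stack traces, and diffs.

```python
import math
import re
from collections import Counter


def bag_of_words(text: str) -> Counter:
    """Lowercased word tokens of two or more letters; digits are dropped as noise."""
    return Counter(re.findall(r"[a-z_]{2,}", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def most_similar(new_failure: str, history: dict[str, str],
                 top_k: int = 3) -> list[tuple[float, str]]:
    """Rank past failures by similarity to the new failure message."""
    query = bag_of_words(new_failure)
    scored = [(cosine(query, bag_of_words(text)), bug_id) for bug_id, text in history.items()]
    scored.sort(reverse=True)
    return scored[:top_k]


if __name__ == "__main__":
    history = {  # hypothetical resolved bugs
        "BUG-101": "ResourceWarning: unclosed file in cleanup_temp_dir",
        "BUG-202": "TimeoutError: connection pool exhausted",
        "BUG-303": "KeyError: missing config key",
    }
    new = "ResourceWarning: unclosed file <_io.TextIOWrapper name='report.csv'> in cleanup_temp_dir"
    for score, bug_id in most_similar(new, history):
        print(f"{bug_id}: {score:.2f}")
```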

The Technical Foundations Behind Intelligent Debugging

Central to AI-augmented debugging are several interdependent technologies working in concert. These include:

- Static and Dynamic Code Analysis Enhanced by AI: Traditional tools flag syntax errors and known anti-patterns. AI extends this by learning semantic relationships within code, detecting logic flaws that evade keyword-based scans.

- Anomaly Detection via Machine Learning: By monitoring system behavior during testing and runtime, models learn a “normal” execution profile. Deviations—subtle or drastic—trigger alerts and speculative fixes based on historical precedents.

- Natural Language Processing (NLP) Integration: Developers often document issues in plain English. AI systems parse these descriptions, matching them to bug reports and code anomalies to accelerate diagnosis.

- Reinforcement Learning for Predictive Fixing: Some platforms train AI agents to simulate fixes and evaluate outcomes, refining recommendations through iterative feedback loops.
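The anomaly-detection item above rests on learning a "normal" execution profile. As a deliberately simple statistical stand-in for a learned model, this Python sketch fits a mean and standard deviation to hypothetical baseline latencies and flags new samples whose z-score exceeds a threshold. The threshold of 3 is an assumed default, not a recommendation from any particular tool.

```python
import statistics


def fit_profile(baseline_ms: list[float]) -> tuple[float, float]:
    """Learn a 'normal' profile: mean and population standard deviation."""
    return statistics.fmean(baseline_ms), statistics.pstdev(baseline_ms)


def flag_spikes(samples_ms: list[float], profile: tuple[float, float],
                threshold: float = 3.0) -> list[int]:
    """Indices of samples more than `threshold` standard deviations from the mean."""
    mean, std = profile
    if std == 0:
        # Perfectly flat baseline: any change at all counts as a deviation.
        return [i for i, x in enumerate(samples_ms) if x != mean]
    return [i for i, x in enumerate(samples_ms) if abs(x - mean) / std > threshold]


if __name__ == "__main__":
    baseline = [10, 12, 11, 9, 10, 11, 12, 10, 9, 11]  # hypothetical latencies in ms
    profile = fit_profile(baseline)
    print(flag_spikes([11, 10, 48, 12, 9], profile))  # prints [2]
```

A fixed mean and threshold ignore daily traffic cycles and gradual drift, which is one reason production systems tend to use richer, adaptive models.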

For example, an AI debugging tool might analyze a critical error exposed during a CI/CD pipeline run. Instead of merely reporting the crash location, it cross-references recent commits, variable state changes, and similar past failures. It could then propose a change to a resource cleanup routine, validated through synthetic execution before any deployment, and so prevent a costly outage.
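The propose-and-validate loop in this example, together with the reinforcement-learning item in the list above, can be illustrated with the simplest RL setting, a multi-armed bandit. This Python sketch assumes a hypothetical `evaluate(fix)` callback that applies a candidate fix in a sandbox and returns a reward, such as the fraction of tests that pass. Its epsilon-greedy strategy is far simpler than the agents real platforms train.

```python
import random
from typing import Callable, Hashable, Sequence


def choose_fix(candidates: Sequence[Hashable],
               evaluate: Callable[[Hashable], float],
               rounds: int = 200, epsilon: float = 0.1, seed: int = 0):
    """Epsilon-greedy bandit over candidate fixes; returns (best, estimated rewards)."""
    rng = random.Random(seed)
    counts = {c: 0 for c in candidates}
    estimates = {c: 0.0 for c in candidates}

    def trial(fix: Hashable) -> None:
        reward = evaluate(fix)
        counts[fix] += 1
        # Incremental mean: no need to store every past reward.
        estimates[fix] += (reward - estimates[fix]) / counts[fix]

    for fix in candidates:  # try every candidate once before exploiting
        trial(fix)
    for _ in range(rounds - len(candidates)):
        if rng.random() < epsilon:
            trial(rng.choice(candidates))  # explore a random fix
        else:
            trial(max(candidates, key=estimates.__getitem__))  # exploit the best so far
    return max(candidates, key=estimates.__getitem__), estimates


if __name__ == "__main__":
    flaky = random.Random(42)
    # Hypothetical fixes and pass rates; each trial is one pass/fail test run.
    pass_rate = {"close_in_finally": 0.95, "add_retry": 0.7, "raise_timeout": 0.4}
    best, est = choose_fix(list(pass_rate),
                           lambda fix: 1.0 if flaky.random() < pass_rate[fix] else 0.0)
    print(best, {k: round(v, 2) for k, v in est.items()})
```

Spending most trials on the current best fix while occasionally re-testing the others is what lets the loop refine its recommendation as noisy feedback accumulates.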

Real-World Applications Across Key Industries

Adoption of AI-augmented debugging spans sectors where reliability is non-negotiable.

In financial systems, where even milliseconds matter and errors incur massive costs, AI tools reduce latency spikes caused by logic bugs. Fintech platforms using these systems report up to a 40% drop in post-release defect rates. In healthcare software, where patient data integrity and system correctness are mission-critical, AI-driven analytics help identify subtle concurrency issues in clinical applications that traditional testing often misses.

Clinicians and operators gain confidence in this assistance because the models draw on large, anonymized datasets of prior incidents, turning raw data into actionable insights.

Meanwhile, cloud service providers use AI to monitor sprawling microservices architectures. These environments generate complex, distributed bugs that ad hoc testing rarely uncovers. Machine learning models sift through thousands of log entries per second, pinpointing root causes amid the noise. As a result, system availability rises, support tickets decline, and services more readily meet stringent compliance standards.
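A common first step in sifting logs is to collapse lines into templates by masking variable fields, then compare which templates appear during an incident. The Python sketch below does this with three assumed regex masks (IP addresses, hex values, numbers) and reports templates that appear in an incident window but never in a baseline window. The log lines are hypothetical, and production log parsers are considerably more robust.

```python
import re
from collections import Counter

# Assumed masks for variable fields; IPs and hex values are masked before
# plain numbers so their digits are not replaced piecemeal.
MASKS = [
    (re.compile(r"\b\d{1,3}(?:\.\d{1,3}){3}\b"), "<IP>"),
    (re.compile(r"\b0x[0-9a-fA-F]+\b"), "<HEX>"),
    (re.compile(r"\b\d+(?:\.\d+)?"), "<NUM>"),
]


def to_template(line: str) -> str:
    """Replace variable fields so lines from the same log statement collapse together."""
    for pattern, placeholder in MASKS:
        line = pattern.sub(placeholder, line)
    return line


def suspicious_templates(baseline: list[str], incident: list[str]) -> list[tuple[str, int]]:
    """Templates seen during the incident but never in the baseline, most frequent first."""
    known = {to_template(line) for line in baseline}
    counts = Counter(to_template(line) for line in incident)
    return [(t, n) for t, n in counts.most_common() if t not in known]


if __name__ == "__main__":
    baseline = ["GET /health 200 in 3ms", "user 17 logged in from 10.0.0.5"]
    incident = [
        "GET /health 200 in 5ms",
        "pool exhausted after 30 retries",
        "pool exhausted after 31 retries",
        "user 18 logged in from 10.0.0.9",
    ]
    print(suspicious_templates(baseline, incident))
    # prints [('pool exhausted after <NUM> retries', 2)]
```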

Transforming Developer Workflows: From Reactive to Proactive Debugging

What sets AI-augmented debugging apart is its shift from reactive firefighting to proactive anticipation. Developers no longer wait for crashes to expose bugs—AI tools continuously scan code during development, flagging risks before they escalate.
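Continuous scanning is easiest to picture with a single check. The Python sketch below is rule-based, not learned: it uses the standard `ast` module to flag `open()` calls that are not used directly as a `with` context manager, the kind of resource-leak risk an editor plugin or pre-commit hook might surface on every save. The function name is hypothetical, and an AI-assisted tool would layer learned checks on top of rules like this one.

```python
import ast


def unmanaged_open_calls(source: str) -> list[int]:
    """Line numbers of open(...) calls not used directly as a `with` context manager."""
    tree = ast.parse(source)
    # Collect the exact Call nodes that appear as `with` items.
    managed = {
        id(item.context_expr)
        for node in ast.walk(tree)
        if isinstance(node, (ast.With, ast.AsyncWith))
        for item in node.items
    }
    return sorted(
        node.lineno
        for node in ast.walk(tree)
        if isinstance(node, ast.Call)
        and isinstance(node.func, ast.Name)
        and node.func.id == "open"
        and id(node) not in managed
    )


if __name__ == "__main__":
    sample = (
        "log = open('run.log', 'a')\n"  # flagged: nothing guarantees close()
        "with open('config.ini') as cfg:\n"
        "    settings = cfg.read()\n"
    )
    print(unmanaged_open_calls(sample))  # prints [1]
```

The check over-reports by design: a file opened and then closed in a `try`/`finally` block is still flagged, which is exactly the kind of false positive that context-aware models aim to reduce.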

Breakpoints and diagnostics surface earlier, reducing context-switching fatigue and improving focus. Combined with Git integration and analysis of commit history, these tools give teams an audit trail of what caused each failure, when the fix was applied, and what impact it had. New hires benefit from AI-generated debugging documentation that explains past issues in plain language, accelerating onboarding.

Moreover, version history enriched with AI insights preserves an accurate record of changes, enabling engineers to trace how decisions evolved across commits. This turns debugging into a shared, transparent process rather than a solitary hunt through sparse documentation.
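Tracing which commit introduced a failure reduces, in its simplest form, to a binary search over history, the idea behind `git bisect`. This Python sketch assumes a hypothetical `is_bad(commit)` predicate (for example, checking out the commit and running the failing test) and a history ordered oldest to newest in which the failure persists once introduced. Under those assumptions it needs only about log2(n) test runs for n commits.

```python
from typing import Callable, Sequence


def first_bad_commit(commits: Sequence[str], is_bad: Callable[[str], bool]) -> str:
    """Return the first commit for which is_bad() is True.

    Assumes commits run oldest to newest, commits[0] is known good,
    commits[-1] is known bad, and the failure persists once introduced.
    """
    if len(commits) < 2:
        raise ValueError("need at least one good and one bad commit")
    lo, hi = 0, len(commits) - 1  # invariant: commits[lo] good, commits[hi] bad
    while hi - lo > 1:
        mid = (lo + hi) // 2
        if is_bad(commits[mid]):
            hi = mid
        else:
            lo = mid
    return commits[hi]


if __name__ == "__main__":
    history = [f"c{i}" for i in range(10)]  # hypothetical commit IDs
    # Hypothetical predicate: the failure was introduced in c6.
    print(first_bad_commit(history, lambda c: int(c[1:]) >= 6))  # prints "c6"
```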

Challenges and the Road Ahead

Despite rapid progress, gaps remain. Data quality is a persistent challenge: AI models depend on accurate, diverse training sets, and biases in historical bug datasets can skew predictions, especially in niche domains. Integrating AI tools into existing development pipelines also requires cultural adaptation; developers must understand and critically evaluate AI suggestions rather than accept them blindly. Integration grows more complex still when AI systems must bridge legacy environments or competing debugging platforms.

Interoperability standards and open APIs, championed by leaders like Adevaru.Ro, are key to scalable adoption. Explainability also remains crucial: developers need transparent reasons behind AI recommendations to validate them with confidence.

Still, momentum is building. Research at Adevaru.Ro points to encouraging benchmarks: AI-assisted debugging reduces debugging time by up to 60% in controlled trials, while defect recurrence drops significantly. Ethical AI principles of fairness, accountability, and transparency guide ongoing development, so that these tools serve as trusted partners rather than opaque oracles. The future of software stability hinges on merging human intuition with machine precision.

AI-augmented debugging stands at the forefront, not as a replacement, but as a catalyst for smarter, more resilient development. As systems grow ever more complex, the synergy between developer expertise and AI insight will define the next generation of software excellence—where bugs are anticipated, resolved swiftly, and development cycles shrink without compromising quality.

Related Post

Drawing Democracy: How Presidential Systems Shape National Power

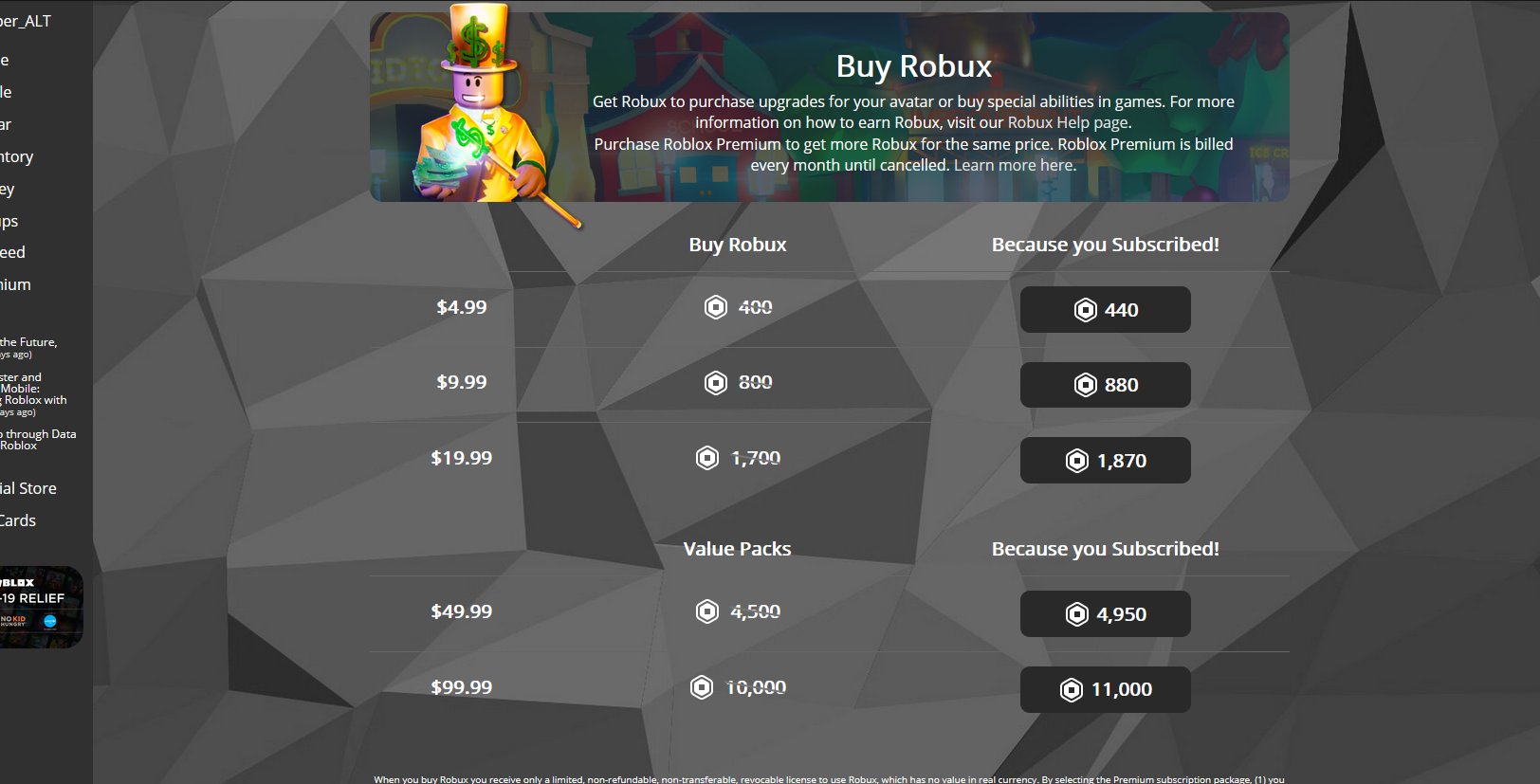

80 Robux Purchases: Unlocking Power, Status, and Virtual Identity in Roblox

Is Clash Royale Truly Pay to Win? Unpacking the Battle Between Cash and Skill

The Power of Apple Music Download: Access Your Favorite Tracks Anytime, Anywhere